Terraform in Practice: Exploring the Trade-offs of Importing into Modules vs Resources in AWS

While completing the AWS Resume Challenge, I decided to import the resources I had created in the console rather than destroy them and redo everything in IaC. This led me to look for efficient ways to import resources into Terraform and, after trying third-party tools, I found myself sticking with native Terraform methods. More on this in my previous blog, Easily Import AWS Resources into Terraform.

For the project, I needed to import an S3 bucket, a CloudFront distribution, a DynamoDB table, a Lambda function, and an API Gateway. As I went deeper into importing the resources, I found that it can be more complex than it seems at a high level.

One can simply declare import blocks like the following and run terraform plan -generate-config-out=generated_resources.tf to generate the corresponding resource blocks.

import {
  to = aws_s3_bucket.resume_bucket
  id = "deployed_bucket"
}

import {
  to = aws_cloudfront_distribution.cf_distribution
  id = "FE1I5G3E0PD2L"
}

import {
  to = aws_dynamodb_table.visit_counter
  id = "tableName"
}

import {
  to = aws_apigatewayv2_api.apigw
  id = "5tebe15ge0"
}

import {
  to = aws_lambda_function.lambda_update_count
  id = "funcName"
}

As for how the values in the id argument are acquired, they have to be retrieved manually so far. I suggest using the AWS CLI with commands such as aws cloudfront list-distributions, which shows the Distribution ID for import.

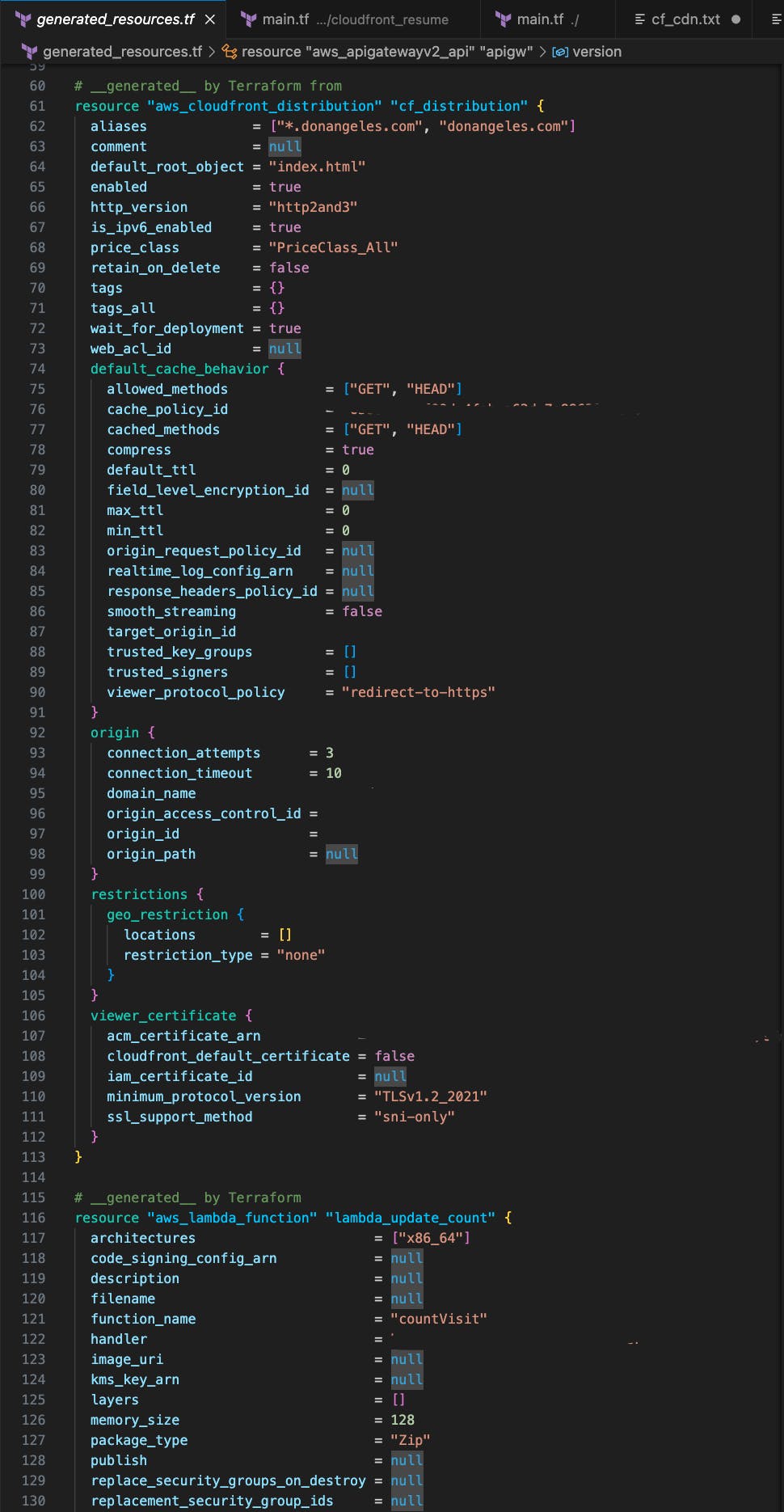
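
Import blocks and the -generate-config-out flag were introduced in Terraform 1.5, so it may help to pin that requirement before planning. The following is a minimal sketch, not my exact setup: the AWS provider version constraint is an assumption, and the region is simply the one that appears in the state file later in this post.

```hcl
terraform {
  # import blocks and -generate-config-out require Terraform 1.5 or later
  required_version = ">= 1.5.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 5.0" # assumed constraint; use the version your project targets
    }
  }
}

provider "aws" {
  region = "us-east-1" # assumed; must be the region where the resources live
}
```

With this in place, the import blocks above can sit in any .tf file in the same directory.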

Looking at the generated resource configuration, like the one shown further below, I thought that a main.tf that looks out of order would not bode well for scaling or maintenance. Simply put, there were too many attributes that I did not need to declare. This made me wonder if I could import into modules instead, where I could have a simpler main.tf and much of the configuration could be hidden away.

Is It Really Needed?

One of the reasons I thought of using a module is that the AWS provider deprecated some arguments of resources like aws_s3_bucket in favor of separate resources. For example, versioning, which used to be part of the aws_s3_bucket resource, is now recommended to be declared as a separate resource:

resource "aws_s3_bucket" "example" {
  bucket = "example-bucket"
}

resource "aws_s3_bucket_versioning" "versioning_example" {
  bucket = aws_s3_bucket.example.id

  versioning_configuration {
    status = "Enabled"
  }
}

While I believe this is meant to provide a clearer structure and granular control for infrastructure management, I did not see the need to do so for this project.

In this case, using the module would simplify the above code like this:

module "resume_bucket" {
  source = "terraform-aws-modules/s3-bucket/aws"

  bucket = "deployed_bucket"

  versioning = {
    enabled    = true
    mfa_delete = false
  }
}

Additionally, I thought that importing into a module would be better because the module declares attributes behind the scenes, so that once an import is made, they would be reflected in the state file without my having to declare every attribute. After testing, this turned out to be true for both importing into a module and importing into a resource block. However, although either approach yields roughly the same result, importing into a module also generated a separate resource in the state file for the versioning setting of the bucket.

The following resource configuration was automatically generated by Terraform using terraform plan -generate-config-out=generated_resources.tf:

# __generated__ by Terraform
# Please review these resources and move them into your main configuration files.

# __generated__ by Terraform from "123samplebucket"
resource "aws_s3_bucket" "sample_bucket" {
  bucket              = "123samplebucket"
  bucket_prefix       = null
  force_destroy       = null
  object_lock_enabled = false
  tags                = {}
  tags_all            = {}
}

After running terraform apply, Terraform generated a state file with many attributes that were not declared in the above resource:

{
  "version": 4,
  "terraform_version": "1.5.2",
  "serial": 1,
  "lineage": "135fac88-248b-3a41-a8d8-21a7f81fcdf0",
  "outputs": {},
  "resources": [
    {
      "mode": "managed",
      "type": "aws_s3_bucket",
      "name": "sample_bucket",
      "provider": "provider[\"registry.terraform.io/hashicorp/aws\"]",
      "instances": [
        {
          "schema_version": 0,
          "attributes": {
            "acceleration_status": "",
            "acl": null,
            "arn": "arn:aws:s3:::123samplebucket",
            "bucket": "123samplebucket",
            "bucket_domain_name": "123samplebucket.s3.amazonaws.com",
            "bucket_prefix": "",
            "bucket_regional_domain_name": "123samplebucket.s3.us-east-1.amazonaws.com",
            "cors_rule": [],
            "force_destroy": null,
            "grant": [
              {
                "id": "539ffa3dd050d0ee350875fa298bf05bd4b0739ac262de0021d2dfc572ce3fed",
                "permissions": [
                  "FULL_CONTROL"
                ],
                "type": "CanonicalUser",
                "uri": ""
              }
            ],
            "hosted_zone_id": "Z3AQBSTGFYJSTF",
            "id": "123samplebucket",
            "lifecycle_rule": [],
            "logging": [],
            "object_lock_configuration": [],
            "object_lock_enabled": false,
            "policy": "",
            "region": "us-east-1",
            "replication_configuration": [],
            "request_payer": "BucketOwner",
            "server_side_encryption_configuration": [
              {
                "rule": [
                  {
                    "apply_server_side_encryption_by_default": [
                      {
                        "kms_master_key_id": "",
                        "sse_algorithm": "AES256"
                      }
                    ],
                    "bucket_key_enabled": true
                  }
                ]
              }
            ],
            "tags": {},
            "tags_all": {},
            "timeouts": null,
            "versioning": [
              {
                "enabled": true,
                "mfa_delete": false
              }
            ],
            "website": [],
            "website_domain": null,
            "website_endpoint": null
          },
          "sensitive_attributes": [],
          "private": "eyJlMmJmYjczMC1lY2FhLTExZTYtOGY4OC0zNDM2M2JjN2M0YzAiOnsiY3JlYXRlIjoxMjAwMDAwMDAwMDAwLCJkZWxldGUiOjM2MDAwMDAwMDAwMDAsInJlYWQiOjEyMDAwMDAwMDAwMDAsInVwZGF0ZSI6MTIwMDAwMDAwMDAwMH0sInNjaGVtYV92ZXJzaW9uIjoiMCJ9"
        }
      ]
    }
  ],
  "check_results": null
}

This is more or less the same as importing into a module, which also retrieves much of the resource configuration from the AWS resource. However, the following shows the aws_s3_bucket_public_access_block resource that was automatically created in the state file after importing the S3 bucket into the module:

{
  "module": "module.sample_bucket",
  "mode": "managed",
  "type": "aws_s3_bucket_public_access_block",
  "name": "this",
  "provider": "provider[\"registry.terraform.io/hashicorp/aws\"]",
  "instances": [
    {
      "index_key": 0,
      "schema_version": 0,
      "attributes": {
        "block_public_acls": true,
        "block_public_policy": true,
        "bucket": "123samplebucket",
        "id": "123samplebucket",
        "ignore_public_acls": true,
        "restrict_public_buckets": true
      },
      "sensitive_attributes": [],
      "private": "bnVsbA==",
      "dependencies": [
        "module.sample_bucket.aws_s3_bucket.this"
      ]
    }
  ]
}

The examples above show that there is a difference between importing into a resource block and importing into a module from the registry. Either way, Terraform will manage the resources, but which one is more advantageous depends on the requirements of the system and, even more so, on the expertise of the developers. Resource blocks allow more granular control over the behavior of the resources, while modules simplify management since they can encapsulate and abstract complex setups.
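
If the extra aws_s3_bucket_public_access_block resource should be adopted from the existing bucket rather than created by the module on apply, one option is to import it as well. This is only a sketch: it assumes the bucket already has a public access block configuration in AWS (I would expect the import to fail if it has none), and it reuses the module address and index that appear in the state excerpt above.

```hcl
module "sample_bucket" {
  source = "terraform-aws-modules/s3-bucket/aws"

  bucket = "123samplebucket"
}

# The bucket itself, as before
import {
  to = module.sample_bucket.aws_s3_bucket.this[0]
  id = "123samplebucket"
}

# Assumption: a public access block already exists on the bucket.
# The import ID for this resource type is the bucket name.
import {
  to = module.sample_bucket.aws_s3_bucket_public_access_block.this[0]
  id = "123samplebucket"
}
```

Running terraform plan afterwards should then list the public access block as an import instead of a create, which makes any differences from the module's defaults easier to spot.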

Using Modules for the Project

Initially, I tried to create my own modules but found that it could be very time-consuming. A separate directory has to be made in which each folder represents a module and contains the resources to declare, the variables, and the outputs. I also had to figure out the dependencies between attributes of the resources, if any, and make multiple adjustments along the way.

.
└── modules
    ├── s3
    │   ├── main.tf
    │   ├── variables.tf
    │   └── outputs.tf
    ├── dynamodb
    │   ├── main.tf
    │   ├── variables.tf
    │   └── outputs.tf
    └── ... # other modules

Fortunately, there's a community-maintained registry of AWS modules readily available. Unfortunately, importing into modules was not as straightforward as I thought it would be. I did a lot of troubleshooting, needed some help along the way, and eventually figured out that an index had to be explicitly declared when importing into a module. I outline the full details of this hurdle here.
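
My understanding of why the index is needed: when a module declares a resource with count, Terraform tracks it as a list of instances, so the import address has to name one element even if there is only one. The sketch below uses a hypothetical, heavily simplified local module (the path ./modules/s3 and the create_bucket variable are illustrative, not the registry module's actual source) to show the pattern.

```hcl
# ./modules/s3/main.tf (hypothetical, simplified module)
variable "create_bucket" {
  type    = bool
  default = true
}

variable "bucket" {
  type = string
}

resource "aws_s3_bucket" "this" {
  # count makes this a list of instances: this[0] when enabled, empty when not
  count  = var.create_bucket ? 1 : 0
  bucket = var.bucket
}

# ./main.tf (root configuration)
module "resume_bucket" {
  source = "./modules/s3"
  bucket = "deployed_bucket"
}

import {
  # the [0] selects the single instance created by count
  to = module.resume_bucket.aws_s3_bucket.this[0]
  id = "deployed_bucket"
}
```

A resource declared without count or for_each would instead be addressed without any index, which is why the import blocks for plain resource blocks earlier did not need one.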

The following code shows how I imported my existing bucket into Terraform using the module.

import {
  to = module.resume_bucket.aws_s3_bucket.this[0]
  id = "deployed_bucket"
}

import {
  to = module.resume_bucket.aws_s3_bucket_versioning.this[0]
  id = "deployed_bucket"
}

module "resume_bucket" {
  source = "terraform-aws-modules/s3-bucket/aws"

  bucket = "deployed_bucket"

  versioning = {
    enabled    = false
    mfa_delete = false
  }
}

Not as Simple as It Seems

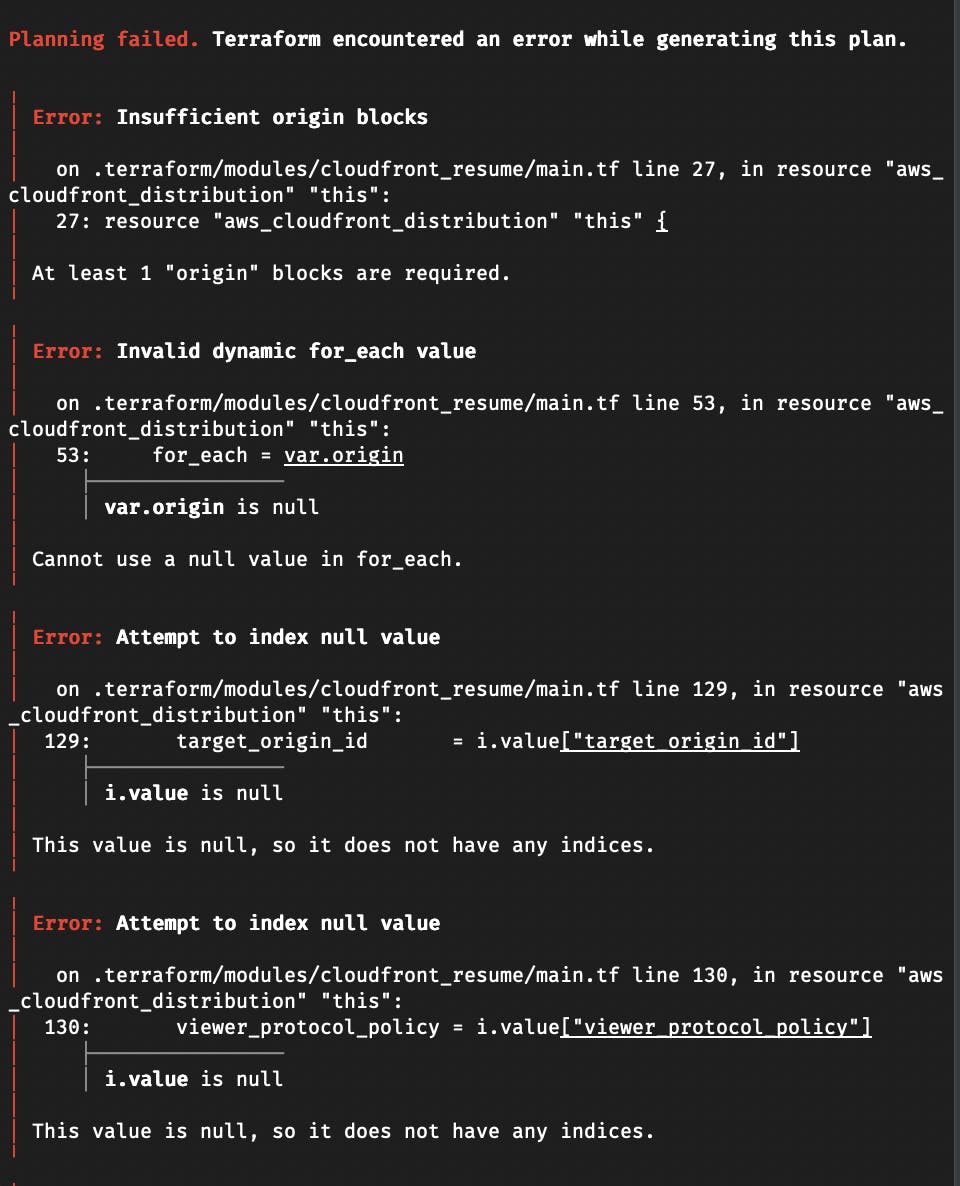

Since importing the S3 bucket was quite simple, I assumed the next resources would be just as simple to declare and import. But again, I was wrong. For resources like CloudFront, I had to figure out which attributes were needed for a proper import. Initially, I tried:

import {
  to = module.cloudfront_resume.aws_cloudfront_distribution.this[0]
  id = "ER0K5G3S0PD5R"
}

module "cloudfront_resume" {
  source = "terraform-aws-modules/cloudfront/aws"
}

This resulted in multiple errors.

I compared the errors against the resource blocks generated by the earlier plan command and declared values for the missing arguments, as in the following.

module "cloudfront_resume" {
  source = "terraform-aws-modules/cloudfront/aws"

  origin = {
    content = {
      domain_name              = "deployed_bucket.s3.us-east-1.amazonaws.com"
      origin_access_control_id = "F6UR0J1N6T39EC"
      # origin_id must match target_origin_id in the cache behavior below
      origin_id                = "deployed_bucket.s3-website-us-east-1.amazonaws.com"
    }
  }

  default_cache_behavior = {
    allowed_methods        = ["GET", "HEAD"]
    cache_policy_id        = "658327ea-f89d-4fab-a63d-7e88639e58f6"
    cached_methods         = ["GET", "HEAD"]
    target_origin_id       = "deployed_bucket.s3-website-us-east-1.amazonaws.com"
    viewer_protocol_policy = "redirect-to-https"
  }
}

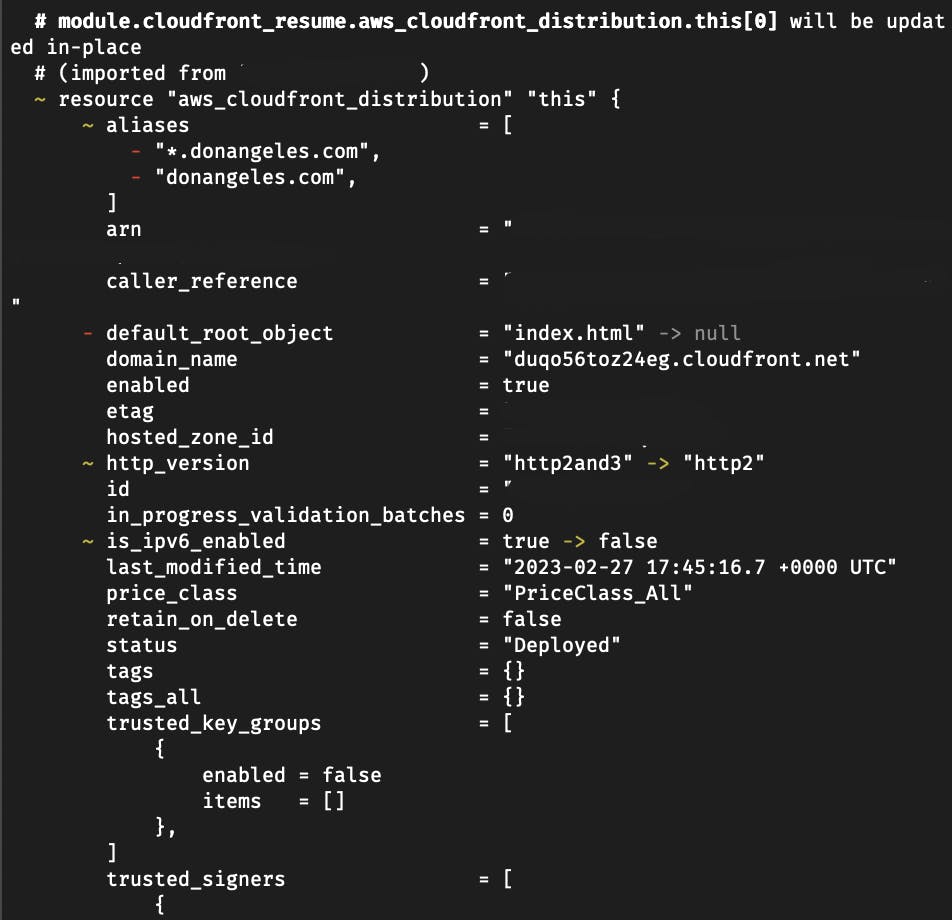

As it is, Terraform would already allow the import, but a close inspection of the plan output shows that some values will be modified.

This is definitely not ideal for a system that's already working as intended. Even when simply importing resources into Terraform, utmost care has to be taken to ensure that unintended changes do not occur!

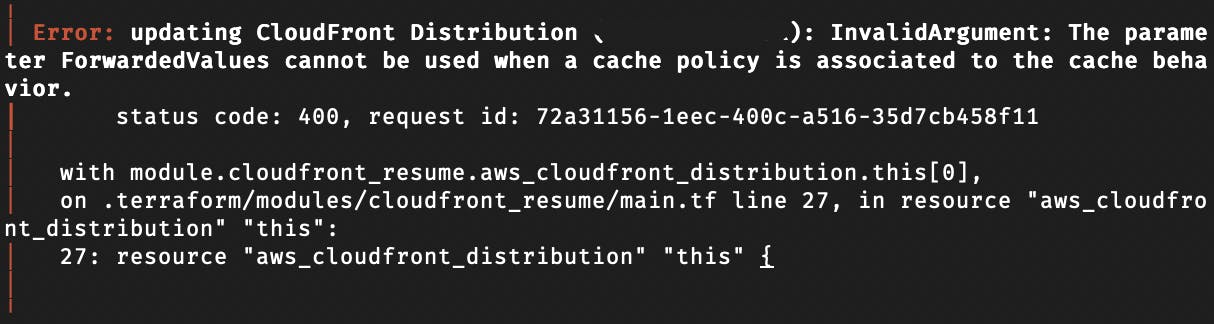

Additionally, premade modules have some default values for some attributes of resources which may hinder proper import or cause unintended behavior of resources. For example, with CloudFront, I received an error:

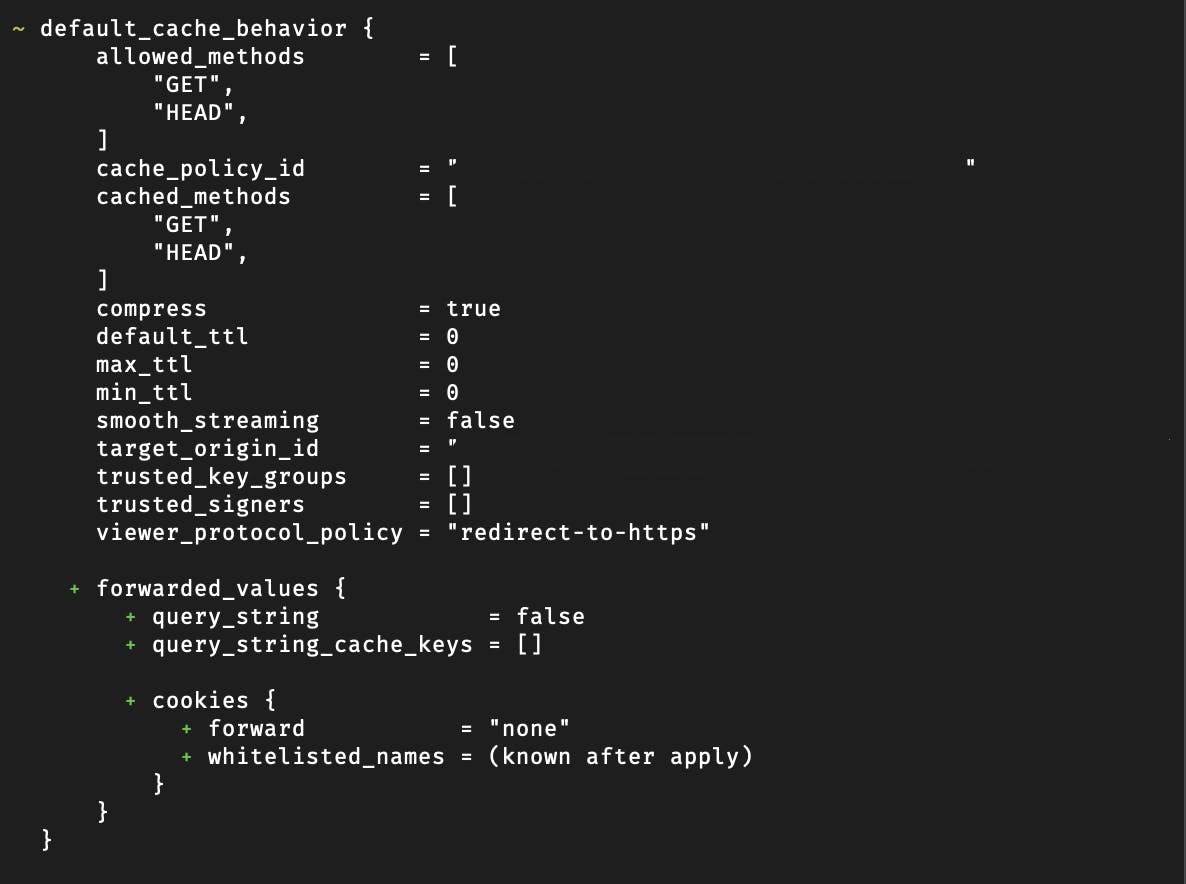

The error occurred because forwarded values were being created, as they had defaults set.

Looking at the module's source code, use_forwarded_values can be set to false to prevent the forwarded_values block from being created when running terraform apply, which lets the resource be imported into management successfully.

dynamic "forwarded_values" {
  for_each = lookup(i.value, "use_forwarded_values", true) ? [true] : []

  content {
    query_string            = lookup(i.value, "query_string", false)
    query_string_cache_keys = lookup(i.value, "query_string_cache_keys", [])
    headers                 = lookup(i.value, "headers", [])

    cookies {
      forward           = lookup(i.value, "cookies_forward", "none")
      whitelisted_names = lookup(i.value, "cookies_whitelisted_names", null)
    }
  }
}
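
Based on the lookup in the excerpt above, the flag is read from each cache behavior map, so it would be set alongside the other default_cache_behavior keys. The following is a sketch under that assumption, reusing the placeholder values from earlier in this post; as I understand it, the default forwarded_values block is what clashes with a behavior that already uses cache_policy_id.

```hcl
module "cloudfront_resume" {
  source = "terraform-aws-modules/cloudfront/aws"

  origin = {
    content = {
      domain_name              = "deployed_bucket.s3.us-east-1.amazonaws.com"
      origin_access_control_id = "F6UR0J1N6T39EC"
      origin_id                = "deployed_bucket.s3-website-us-east-1.amazonaws.com"
    }
  }

  default_cache_behavior = {
    allowed_methods        = ["GET", "HEAD"]
    cached_methods         = ["GET", "HEAD"]
    cache_policy_id        = "658327ea-f89d-4fab-a63d-7e88639e58f6"
    target_origin_id       = "deployed_bucket.s3-website-us-east-1.amazonaws.com"
    viewer_protocol_policy = "redirect-to-https"

    # Picked up by lookup(i.value, "use_forwarded_values", true) in the module;
    # false makes the dynamic block's for_each empty, so no forwarded_values is emitted.
    use_forwarded_values = false
  }
}
```

It is still worth re-running terraform plan after this change and checking that no other default introduces a modification to the live distribution.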

There may also be times when it's best to just create a resource, plain and simple, especially when third-party modules are not working as intended. When I tried to import my Lambda function into the Lambda module, I ran into an error.

Even after troubleshooting with many different configurations and delving deeper into the module's source code, I still couldn't figure it out. At that point, it was much more efficient in terms of time and effort to simply use the previously generated resource block and work my way from there to complete this section of the project.

Conclusion

Choosing between modules and individual resource blocks depends more on the developers' expertise than on the complexity of the system to be managed.

My experience shows that although modules can make configurations visually simple, some of their settings can become hurdles to successful management that would be non-issues when simply importing into resource blocks. In the case of this project, it was not practical to spend too much time figuring out how to import resources that cannot be imported straightforwardly. That said, familiarity with a module should reduce the unexpected behaviors caused by its default settings and unlock the efficiency of using modules for cloud resource management.

Whichever one is used, what's certain is that both require developers to take care before applying the Terraform configuration.